3. K230 AI Demo Overview

This chapter provides more than 50 AI Demo application examples for the K230, covering multiple modalities, with open-source code and usage documentation. Users can get an overview of the application scenarios of AI Demos through this chapter and experience them on the K230 development board. Additionally, users can refer to the source code to develop application demos based on other scenarios.

Note: On the K230D chip, some demos cannot run because of memory limitations; see the K230D column in the table below.

3.1 Overview

The K230 AI Demos cover modules such as face, human body, hand, license plate, word continuation, voice, and DMS (driver monitoring system), and span functions including classification, detection, segmentation, recognition, tracking, and monocular distance measurement. They give customers a reference for developing AI applications on the K230.

3.2 Source Code Location

The source code is located in the src/reference/ai_poc directory under the K230 SDK root directory (k230_sdk/src/reference/ai_poc on the main branch of kendryte/k230_sdk on GitHub, or src/reference/ai_poc in kendryte/k230_sdk on Gitee). For an introduction to each demo, refer to the linked documentation in the table below:

| Demo Subdirectory | Description | GitHub Link | Gitee Link | K230 | K230D | Notes |
|---|---|---|---|---|---|---|
| anomaly_det | Anomaly Detection | | | √ | | |
| bytetrack | Multi-Object Tracking | | | √ | √ | |
| crosswalk_detect | Crosswalk Detection | | | √ | √ | |
| dec_ai_enc | H265 Decoder + AI + H265 Encoder | | | √ | | |
| demo_mix | Demo Mix Collection | | | √ | | k230_sdk<=1.3 |
| distraction_reminder | Distraction Reminder | | | √ | √ | |
| dms_system | Driver Monitoring System | | | √ | √ | |
| dynamic_gesture | Dynamic Gesture Recognition | | | √ | √ | |
| eye_gaze | Gaze Estimation | | | √ | | |
| face_alignment | Face Alignment | | | √ | | |
| face_detection | Face Detection | | | √ | √ | |
| face_emotion | Facial Emotion Recognition | | | √ | √ | |
| face_gender | Gender Classification | | | √ | √ | |
| face_glasses | Glasses Classification | | | √ | √ | |
| face_landmark | Dense Face Landmarks | | | √ | √ | |
| face_mask | Mask Wearing Classification | | | √ | √ | |
| face_mesh | 3D Face Mesh | | | √ | | |
| face_parse | Face Segmentation | | | √ | √ | |
| face_pose | Face Pose Estimation | | | √ | √ | |
| face_verification | Face Verification | | | √ | √ | |
| falldown_detect | Fall Detection | | | √ | √ | |
| finger_guessing | Rock-Paper-Scissors Game | | | √ | √ | |
| fitness | Exercise Counting | | | √ | √ | |
| head_detection | Head Detection | | | √ | √ | |
| helmet_detect | Helmet Detection | | | √ | √ | |
| kws | Keyword Wake-up | | | √ | | |
| licence_det | License Plate Detection | | | √ | √ | |
| licence_det_rec | License Plate Recognition | | | √ | √ | |
| llamac | English Word Continuation | | | √ | | Only runs on Linux, not supported by RT-Smart. |
| nanotracker | Single Object Tracking | | | √ | √ | |
| object_detect_yolov8n | YOLOv8 Multi-Object Detection | | | √ | √ | |
| ocr | OCR Detection + Recognition | | | √ | | |
| person_attr | Human Attributes | | | √ | √ | |
| person_detect | Pedestrian Detection | | | √ | √ | |
| person_distance | Pedestrian Distance Measurement | | | √ | √ | |
| pose_detect | Human Pose Detection | | | √ | √ | |
| pphumanseg | Human Segmentation | | | √ | √ | |
| puzzle_game | Puzzle Game | | | √ | √ | |
| segment_yolov8n | YOLOv8 Multi-Object Segmentation | | | √ | √ | |
| self_learning | Self-Learning (Metric Learning Classification) | | | √ | √ | |
| smoke_detect | Smoking Detection | | | √ | √ | |
| space_resize | Gesture-Based Air Zoom | | | √ | √ | |
| sq_hand_det | Palm Detection | | | √ | √ | |
| sq_handkp_class | Palm Keypoint Gesture Classification | | | √ | √ | |
| sq_handkp_det | Palm Keypoint Detection | | | √ | √ | |
| sq_handkp_flower | Fingertip Flower Classification | | | √ | √ | |
| sq_handkp_ocr | Fingertip OCR Recognition | | | √ | √ | |
| sq_handreco | Gesture Recognition | | | √ | √ | |
| traffic_light_detect | Traffic Light Detection | | | √ | √ | |
| translate_en_ch | English to Chinese Translation | | | √ | | |
| tts_zh | Chinese Text-to-Speech | | | √ | | |
| vehicle_attr | Vehicle Attribute Recognition | | | √ | √ | |
| virtual_keyboard | Virtual Keyboard | | | √ | √ | |
| yolop_lane_seg | Lane Segmentation | | | √ | √ | |

The kmodel files, images, and related dependencies are located in /mnt/src/big/kmodel/ai_poc, with the following directory structure:

```
.
├── tts_zh
│   # The images directory contains test images or test data for demo inference
├── images
│   # The kmodel directory contains all the kmodel models required for the demos
├── kmodel
│   # The utils directory contains utility files used for demo inference
└── utils
```

3.3 Compiling and Running the Program

This section is based on the latest source code of kendryte/k230_sdk (Kendryte K230 SDK) on GitHub or Gitee.

3.3.1. Self-Compiled Image Deployment Process

Follow the instructions in the kendryte/k230_sdk repository (GitHub or Gitee) to build a Docker container and compile the board image:

```shell
# Download the Docker build image
docker pull ghcr.io/kendryte/k230_sdk
# Confirm that the Docker image was pulled successfully
docker images | grep ghcr.io/kendryte/k230_sdk

# Download the SDK source code
git clone https://github.com/kendryte/k230_sdk.git
cd k230_sdk
# Download the Linux and RT-Smart toolchains, the buildroot package, the AI package, etc.
make prepare_sourcecode

# Create a Docker container. $(pwd):$(pwd) maps the current host directory to the same path
# inside the container, and the toolchain directory is mapped to /opt/toolchain inside the container
docker run -u root -it -v $(pwd):$(pwd) -v $(pwd)/toolchain:/opt/toolchain -w $(pwd) ghcr.io/kendryte/k230_sdk /bin/bash

# The following commands run inside the Docker container
make mpp

# (1) To compile the Linux+RT-Smart dual-system image yourself, run:
make CONF=k230_canmv_defconfig

# (2) If you use the Linux+RT-Smart dual-system image downloaded from the developer community,
#     be sure to run the following in the k230_sdk root directory to specify the development board type:
make CONF=k230_canmv_defconfig prepare_memory

# (3) To compile a pure RT-Smart image yourself, run the following.
#     The developer community does not yet provide pure RT-Smart image downloads.
make CONF=k230_canmv_only_rtt_defconfig
```

Please wait patiently for the image to compile successfully.

For a Linux+RT-Smart dual-system build, copy the compiled image from output/k230_canmv_defconfig/images under the k230_sdk root directory and flash it to the SD card. Refer to Image Flashing for the flashing steps:

```
k230_canmv_defconfig/images
├── big-core
├── little-core
├── sysimage-sdcard.img      # SD card boot image
└── sysimage-sdcard.img.gz   # Compressed SD card boot image
```

For an RT-Smart single-system build, copy the compiled image from output/k230_canmv_only_rtt_defconfig/images under the k230_sdk root directory and flash it to the SD card. Refer to Image Flashing for the flashing steps:

```
k230_canmv_only_rtt_defconfig/images
├── big-core
├── sysimage-sdcard.img      # SD card boot image
└── sysimage-sdcard.img.gz   # Compressed SD card boot image
```

Inside the Docker container, go to the k230_sdk root directory and run the following commands to compile the AI demos:

```shell
cd src/reference/ai_poc
# If build_app.sh is not executable, run: chmod +x build_app.sh
# Before running the script, make sure the src/big/kmodel/ai_poc directory
# already contains the corresponding kmodel, images, and utils
./build_app.sh
# To compile a single demo, pass its subdirectory name to build_app.sh, for example:
./build_app.sh face_detection
```
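Since build_app.sh expects the kmodel, images, and utils assets to be in place, a quick pre-flight check can save a failed build. The sketch below is a hypothetical POSIX shell helper (check_ai_poc_assets is not part of the K230 SDK). It assumes the assets live under src/big/kmodel/ai_poc relative to the k230_sdk root, as the comment in the build commands states, and it only checks that the three subdirectories exist and are non-empty, not that they contain the files a particular demo needs.

```shell
# Hypothetical pre-flight check (not part of the K230 SDK): confirm that the
# ai_poc asset directory has non-empty kmodel, images, and utils subdirectories.
# Usage: check_ai_poc_assets <k230_sdk_root>
# Returns 0 if all three are present and non-empty, 1 otherwise.
check_ai_poc_assets() {
    asset_dir="$1/src/big/kmodel/ai_poc"
    result=0
    for sub in kmodel images utils; do
        if [ ! -d "$asset_dir/$sub" ]; then
            echo "missing directory: $asset_dir/$sub" >&2
            result=1
        elif [ -z "$(ls -A "$asset_dir/$sub")" ]; then
            echo "empty directory: $asset_dir/$sub" >&2
            result=1
        fi
    done
    return "$result"
}
```

For example, from the k230_sdk root inside the container: `check_ai_poc_assets . && cd src/reference/ai_poc && ./build_app.sh face_detection`.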

After build_app.sh finishes, the build output of each demo is placed in its own subdirectory under the k230_bin directory.

Copy the corresponding demo folder to the development board and run its .sh script on the big core to start the AI demo.
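To see at a glance which folder to copy and which script to run, a hypothetical helper like the one below (list_demo_scripts is not part of the SDK) can list each demo subdirectory under k230_bin together with the .sh scripts it contains. It assumes each demo's launch scripts sit directly in its own subdirectory, which may not hold for every demo.

```shell
# Hypothetical helper (not part of the K230 SDK): print "<demo>: <script>"
# for every .sh file found directly inside each subdirectory of k230_bin.
# Usage: list_demo_scripts <path/to/k230_bin>
list_demo_scripts() {
    bin_dir="$1"
    for demo in "$bin_dir"/*/; do
        # Skip the unexpanded pattern when k230_bin has no subdirectories
        [ -d "$demo" ] || continue
        name=$(basename "$demo")
        for script in "$demo"*.sh; do
            # Skip the unexpanded pattern when a demo has no .sh scripts
            [ -f "$script" ] || continue
            echo "$name: $(basename "$script")"
        done
    done
}
```

Running `list_demo_scripts src/reference/ai_poc/k230_bin` (the path is an assumption; use wherever your k230_bin was generated) prints one line per launch script.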

Note:

For the Linux+RT-Smart dual system, sharefs is a directory shared between the big and little cores: each core accesses it through its own /sharefs directory, which lets the big core reach files on the little core's file system. Executables for the big core are typically placed in /sharefs so that the big core can run them directly, which simplifies application development and debugging on the big core. Refer to: K230 Big and Little Core Communication Sharefs Usage Introduction.

For the Linux+RT-Smart dual system, the last disk partition may be too small to hold all the files. You can enlarge it with the following commands. Refer to: K230 SDK FAQ Question 9.

```shell
umount /sharefs/
parted -l /dev/mmcblk1
# Replace 31.3GB with the size reported on the "Disk /dev/mmcblk1" line of the previous command's output
parted -a minimal /dev/mmcblk1 resizepart 4 31.3GB
# Reformat the enlarged partition (this erases any data on it)
mkfs.ext2 /dev/mmcblk1p4
mount /dev/mmcblk1p4 /sharefs
```
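Instead of reading the size by eye, the value for resizepart can be extracted from the parted output. The hypothetical helper below (disk_size_from_parted is not part of the SDK) assumes parted prints a line of the form `Disk /dev/mmcblk1: 31.3GB`, as the comment in the commands above implies; check the actual output on your board before relying on it.

```shell
# Hypothetical helper (not part of the K230 SDK): read parted output on stdin
# and print the size from the first line starting with "Disk <device>:".
# Usage: parted -l /dev/mmcblk1 | disk_size_from_parted /dev/mmcblk1
disk_size_from_parted() {
    # For "Disk /dev/mmcblk1: 31.3GB", field 3 is the size.
    # Requiring the trailing ":" avoids matching partitions such as /dev/mmcblk1p4.
    awk -v prefix="Disk $1:" 'index($0, prefix) == 1 { print $3; exit }'
}
```

If parted stops to ask an interactive question (for example about unused disk space), run it interactively first and read the size manually.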

For the Linux+RT-Smart dual system, you can choose an image from Canaan Developer Community -> Downloads -> K230 -> Images. Check which nncase version corresponds to each SDK version via the following link:

K230 SDK nncase Version Correspondence — K230 Documentation (canaan-creative.com)

Refer to Image Flashing for flashing instructions. The developer community provides only Linux+RT-Smart dual-system images; you need to compile the pure RT-Smart image yourself following the steps above.

For the RT-Smart single system, the root directory that the PC sees when copying files corresponds to the big core's /sdcard directory, so look for the copied files under /sdcard on the big core.


The AI Demo sections aim to showcase the powerful performance of the K230 and its wide range of AI application scenarios. Although we provide the source code for reference, most of it is implemented for specific demo task scenarios.

For a deeper understanding of the K230 AI development process, we recommend studying Quick_Start_K230_AI_Inference_Process and In-depth_Analysis_AI_Development_Process. These chapters explain multimedia applications, the AI inference process, and the AI development process on the K230 in detail, analyzing K230 AI development at the code level.

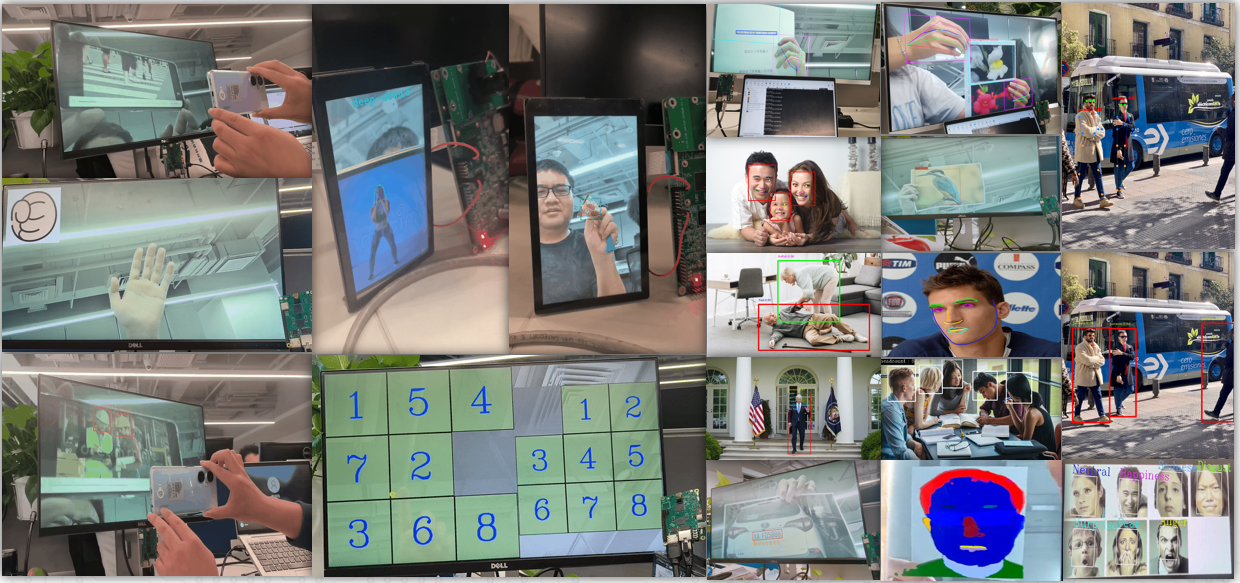

3.4. Demo Showcase

The following images show the demos in action. If you are interested, try running the demos yourself.